Building Your Own ChatGPT for $100: A Complete Tutorial


Introduction

Status: Draft

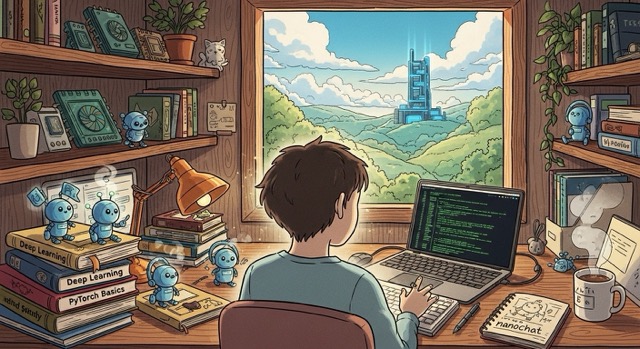

What if I told you that in the next 4 hours, you could train a complete ChatGPT-like system from scratch for just $100? Not a toy demo. Not a fine-tuned wrapper around someone else's model. A real, end-to-end language model trained from raw text to conversational AI.

This tutorial walks you through nanochat, Andrej Karpathy's minimal implementation of the full LLM pipeline. By the end, you'll have built and deployed your own AI assistant that can hold conversations, solve math problems, and even generate code.

What You'll Build

By following this tutorial, you'll create:

- ✅ A custom BPE tokenizer trained on 2 billion characters

- ✅ A 561M parameter transformer pretrained on 11.2B tokens from scratch

- ✅ A chat model fine-tuned through midtraining → SFT → RL

- ✅ A web interface for talking to your model (ChatGPT-style UI)

- ✅ Complete evaluation reports across standard benchmarks

The Investment

What it will cost you:

- ~$100 in GPU time (4 hours on 8xH100 at ~$24/hour)

- An afternoon of your time

- Zero prior LLM training experience required (but PyTorch familiarity helps)

What your model will be capable of:

- Basic conversations and creative writing

- Simple reasoning and question answering

- Tool use (calculator integration)

- Math word problems (to a limited degree)

- Code generation (very basic)

Think of it as "kindergartener-level intelligence"—not going to replace GPT-4, but fully functional and a complete demonstration of how modern LLMs actually work.

Prerequisites & Setup

Technical Requirements

Before diving in, you should have:

- Familiarity with PyTorch and transformer models

- Basic command-line skills (SSH, screen, bash)

- Understanding of language modeling concepts

Compute Requirements

You'll need access to powerful GPUs:

- Recommended: 8x H100 GPUs (80GB each) for the $100/4-hour run

- Alternative: 8x A100 GPUs (slightly slower, ~5-6 hours)

- Budget option: Single GPU (works fine, just 8x longer: ~32 hours)

Where to get GPUs:

- Lambda GPU Cloud - ~$24/hr for 8xH100

- Vast.ai - Variable pricing, potentially cheaper

- RunPod - Good UI, competitive pricing

Cost Breakdown

| Component | Time | Cost (8xH100 @ $24/hr) |
|---|---|---|
| Tokenizer training | ~20 min | ~$8 |
| Base pretraining | ~2 hours | ~$48 |
| Midtraining | ~30 min | ~$12 |
| Supervised fine-tuning | ~1 hour | ~$24 |
| (Optional) RL training | ~30 min | ~$12 |
| Total | ~4 hours | ~$100 |
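If your provider charges a different rate, the table is easy to recompute. A minimal sketch, assuming a flat hourly price for the whole 8xH100 node and the stage durations listed above:

```python
# Stage durations in minutes, taken from the cost table (RL is optional).
STAGES = {
    "Tokenizer training": 20,
    "Base pretraining": 120,
    "Midtraining": 30,
    "Supervised fine-tuning": 60,
    "RL training (optional)": 30,
}

def estimate_cost(hourly_rate, include_rl=True):
    """Return (total_minutes, total_dollars) for a flat per-node hourly rate."""
    minutes = sum(
        m for name, m in STAGES.items()
        if include_rl or "optional" not in name
    )
    return minutes, minutes / 60 * hourly_rate

if __name__ == "__main__":
    for rl in (False, True):
        minutes, dollars = estimate_cost(24.0, include_rl=rl)
        print(f"RL={rl}: {minutes / 60:.2f} h, ${dollars:.2f}")
    # RL=False: 3.83 h, $92.00
    # RL=True: 4.33 h, $104.00
```

The stage times sum to roughly 3.8 hours without RL and 4.3 hours with it, so "~4 hours, ~$100" is a round figure rather than an exact total.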

Expected Performance

Your trained model will achieve approximately:

| Benchmark | Score | What it measures |
|---|---|---|
| CORE | 22.19% | Language understanding (GPT-2 is ~29%) |
| MMLU | 31.51% | Multiple-choice reasoning (random is 25%) |
| ARC-Easy | 38.76% | Elementary science questions |
| ARC-Challenge | 28.07% | Harder science reasoning |
| GSM8K | 7.58% | Math word problems (with RL) |
| HumanEval | 8.54% | Code generation |

Getting Started

Step 1: Provision Your GPU Node

Using Lambda GPU Cloud:

- Sign up at lambda.ai/service/gpu-cloud

- Click "Launch Instance"

- Select: 8x H100 SXM (80 GB)

- Choose Ubuntu 22.04 LTS

- Add your SSH key and launch

Step 2: Connect and Clone

ssh ubuntu@<your-instance-ip>

# Verify GPUs

nvidia-smi

# Clone nanochat

git clone https://github.com/karpathy/nanochat.git

cd nanochat

The entire codebase is just ~8,300 lines across 44 files: minimal and readable.

Step 3: (Optional) Enable Logging

For experiment tracking with Weights & Biases:

pip install wandb

wandb login

export WANDB_RUN=my_first_chatgpt

Step 4: Launch Training

Run the complete pipeline:

screen -L -Logfile speedrun.log -S speedrun bash speedrun.sh

Screen tips:

- Ctrl-a d: Detach (training continues)

- screen -r speedrun: Re-attach

- tail -f speedrun.log: Monitor progress

The Training Pipeline

The script automatically executes:

- Install dependencies (uv, Rust)

- Download FineWeb data (~24GB)

- Train BPE tokenizer (~20 min)

- Base pretraining (~2 hours)

- Midtraining (~30 min)

- Supervised fine-tuning (~1 hour)

- Generate evaluation reports

Stage 1: Tokenizer Training

What Happens

Builds a custom BPE tokenizer with 65,536 tokens, trained on 2 billion characters from FineWeb.
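To make "BPE" concrete: training starts from raw bytes and repeatedly merges the most frequent adjacent pair into a new token. The toy sketch below shows only that core loop on a tiny string; it is not nanochat's tokenizer code, which is built for speed on billions of characters.

```python
from collections import Counter

def train_bpe(text, num_merges):
    """Learn byte-level BPE merges on a toy string.

    Starts from raw UTF-8 bytes (ids 0-255) and repeatedly replaces the most
    frequent adjacent pair with a new token id (256, 257, ...).
    """
    ids = list(text.encode("utf-8"))
    merges = {}  # (left_id, right_id) -> new_id
    for step in range(num_merges):
        pairs = Counter(zip(ids, ids[1:]))
        if not pairs:
            break
        pair = max(pairs, key=pairs.get)  # most frequent pair; first seen wins ties
        new_id = 256 + step
        merges[pair] = new_id
        # Rewrite the sequence, replacing every non-overlapping occurrence of `pair`.
        out, i = [], 0
        while i < len(ids):
            if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
                out.append(new_id)
                i += 2
            else:
                out.append(ids[i])
                i += 1
        ids = out
    return merges, ids

if __name__ == "__main__":
    merges, ids = train_bpe("aaabdaaabac", 2)
    print(merges)  # {(97, 97): 256, (256, 97): 257}
    print(ids)     # [257, 98, 100, 257, 98, 97, 99]
```

A real run keeps merging until the vocabulary reaches its target size (65,536 here), and each merge shortens the encoded text a little more.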

Special tokens for chat:

SPECIAL_TOKENS = [
    "<|bos|>",              # Beginning of sequence
    "<|user_start|>",       # User messages
    "<|user_end|>",
    "<|assistant_start|>",  # Assistant responses
    "<|assistant_end|>",
    "<|python_start|>",     # Tool use (calculator)
    "<|python_end|>",
    "<|output_start|>",     # Tool output
    "<|output_end|>",
]

These tokens structure conversations and enable tool use during inference.
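Here is a rough picture of how these tokens delimit a chat. The hypothetical `render_conversation` below works on strings for readability; a real implementation would emit token ids, and the exact layout is an assumption.

```python
# Special token strings from the list above; ids and exact layout are up to the tokenizer.
BOS = "<|bos|>"
ROLE_TOKENS = {
    "user": ("<|user_start|>", "<|user_end|>"),
    "assistant": ("<|assistant_start|>", "<|assistant_end|>"),
}

def render_conversation(messages):
    """Flatten a list of {"role", "content"} dicts into one delimited string."""
    parts = [BOS]
    for msg in messages:
        start, end = ROLE_TOKENS[msg["role"]]  # KeyError on unknown roles
        parts.append(f"{start}{msg['content']}{end}")
    return "".join(parts)

if __name__ == "__main__":
    chat = [
        {"role": "user", "content": "What's 15 times 23?"},
        {"role": "assistant", "content": "The answer is 345."},
    ]
    print(render_conversation(chat))
    # <|bos|><|user_start|>What's 15 times 23?<|user_end|><|assistant_start|>The answer is 345.<|assistant_end|>
```

At inference time the prompt typically ends with `<|assistant_start|>`, and generation stops once the model emits `<|assistant_end|>`.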

Stage 2: Base Pretraining

The Core Training

Trains a 561M parameter transformer from scratch on ~11.2 billion tokens.

Model architecture:

- Parameters: 561M

- Layers: 20

- Attention heads: 10 (head dimension 128)

- Embedding dimension: 1280

- Sequence length: 2048 tokens

Key features:

- Pre-norm architecture for stability

- Rotary position embeddings (RoPE)

- Multi-Query Attention (MQA) support for faster inference

- ReLU² activation

- RMSNorm without learnable parameters
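The 561M figure can be checked by hand. The sketch below assumes nanochat-style conventions (width = 64 × depth, untied input embedding and output head, no biases, parameter-free RMSNorm, 4d² attention weights and 8d² MLP weights per layer); under those assumptions it lands exactly on 561M, and the 11.2B-token budget works out to 20 tokens per parameter.

```python
def nanochat_style_param_count(depth, vocab_size=65_536, aspect_ratio=64):
    """Rough parameter count for a GPT-style model with nanochat-like conventions.

    Assumptions (check against the real config): width d = depth * 64,
    untied token embedding and lm_head, no biases, parameter-free RMSNorm,
    full-size q/k/v/o projections (4*d^2) and a 4x-expansion MLP (8*d^2).
    """
    d = depth * aspect_ratio
    embeddings = 2 * vocab_size * d    # token embedding + lm_head (untied)
    per_layer = 4 * d * d + 8 * d * d  # attention projections + two MLP matrices
    return embeddings + depth * per_layer

if __name__ == "__main__":
    n = nanochat_style_param_count(20)
    print(f"d20 params: {n:,}")                             # d20 params: 560,988,160
    print(f"20 tokens/param: {20 * n / 1e9:.1f}B tokens")   # 20 tokens/param: 11.2B tokens
```

Under the same assumptions, depth 26 (width 1,664) comes to about 1.08B parameters.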

Dual Optimizer Innovation

Uses two optimizers simultaneously:

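- Muon (MomentUm Orthogonalized by Newton-Schulz) for the 2D weight matrices inside the transformer blocks

- AdamW for the token embedding and the output (lm_head) layer

Muon tends to reach a given loss faster than AdamW on hidden-layer weight matrices, while AdamW remains the better fit for the embedding and output layers, so each group of parameters gets the optimizer that suits it.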
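Note that the split is by role, not by tensor rank: the embedding and lm_head are 2D matrices too, yet they go to AdamW. A minimal sketch of that routing over (name, shape) pairs; the parameter names are hypothetical, and in PyTorch you would pass the two resulting groups to the two optimizers.

```python
def route_parameters(named_shapes):
    """Split parameters between Muon and AdamW by role (hypothetical names).

    Hidden 2D weight matrices go to Muon; the token embedding and lm_head
    (also 2D, but lookup/readout layers) go to AdamW, as does any 1D leftover.
    """
    muon, adamw = [], []
    for name, shape in named_shapes:
        if name.startswith(("wte", "lm_head")):
            adamw.append(name)
        elif len(shape) == 2:
            muon.append(name)
        else:
            adamw.append(name)  # biases, gains, scalars (if the model has any)
    return muon, adamw

if __name__ == "__main__":
    params = [
        ("wte.weight", (65536, 1280)),
        ("h.0.attn.c_q.weight", (1280, 1280)),
        ("h.0.mlp.c_fc.weight", (5120, 1280)),
        ("lm_head.weight", (65536, 1280)),
    ]
    muon, adamw = route_parameters(params)
    print(muon)   # ['h.0.attn.c_q.weight', 'h.0.mlp.c_fc.weight']
    print(adamw)  # ['wte.weight', 'lm_head.weight']
```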

Training Progress

Watch for output along these lines (illustrative values; the exact numbers and log format will differ):

Step 0000 | train_loss: 11.2341 | val_loss: 11.1876

Step 0500 | train_loss: 8.4562 | CORE: 8.92%

Step 1000 | train_loss: 7.1234 | CORE: 15.43%

Step 1500 | train_loss: 6.5432 | CORE: 19.34%

Final | train_loss: 5.8765 | CORE: 22.19% ✓

The loss should drop quickly from about 11 at initialization, while the CORE score climbs toward roughly 22%.
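Two quick numbers help when reading a log like this. A freshly initialized model spreads probability almost evenly over all 65,536 tokens, so its cross-entropy starts near ln(65,536) ≈ 11.09 nats, which is why the first logged loss sits around 11. The step count follows from the token budget; the 524,288-token batch below is an assumption about nanochat's default, not something stated above.

```python
import math

def expected_initial_loss(vocab_size):
    """Cross-entropy (nats) of a uniform guess over the vocabulary: ln(V)."""
    return math.log(vocab_size)

def num_optimizer_steps(total_tokens, tokens_per_step):
    """How many optimizer steps a token budget buys at a fixed batch size."""
    return total_tokens // tokens_per_step

if __name__ == "__main__":
    print(f"{expected_initial_loss(65_536):.2f}")  # 11.09
    # Assumptions: 20 tokens per parameter for a 560,988,160-parameter d20
    # model, and a total batch of 524,288 tokens per optimizer step.
    print(f"{num_optimizer_steps(20 * 560_988_160, 524_288):,}")  # 21,400
```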

Stage 3: Midtraining

Bridging Language to Conversation

Teaches the model:

- Chat format with special tokens

- Tool use (calculator integration)

- Multiple-choice reasoning

Training data:

- 50K conversations (SmolTalk)

- ~5K multiple-choice examples (MMLU, ARC)

- 5K synthetic calculator examples
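The source doesn't show what the synthetic calculator examples look like. A hypothetical generator in this spirit might produce conversations like the following; the template, operand ranges, and message format are all assumptions, not nanochat's actual data.

```python
import random

def make_calculator_example(rng):
    """Build one hypothetical midtraining conversation that uses the calculator tool."""
    a, b = rng.randint(10, 999), rng.randint(10, 999)
    op = rng.choice(["+", "-", "*"])
    expr = f"{a} {op} {b}"
    result = {"+": a + b, "-": a - b, "*": a * b}[op]
    # The assistant writes the tool call, sees the tool output, then answers.
    assistant = (
        f"<|python_start|>{expr}<|python_end|>"
        f"<|output_start|>{result}<|output_end|>"
        f"The answer is {result}."
    )
    return [
        {"role": "user", "content": f"What is {expr}?"},
        {"role": "assistant", "content": assistant},
    ]

if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for reproducibility
    for msg in make_calculator_example(rng):
        print(msg["role"], "->", msg["content"])
```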

Results:

- ARC tasks: 28-36%

- MMLU: 31.11%

- GSM8K: 2.50% (still weak)

- HumanEval: 6.71% (basic coding emerges)

Stage 4: Supervised Fine-Tuning

Learning to Follow Instructions

SFT uses selective loss masking—only training on assistant responses:

<|user_start|> Question <|user_end|> <|assistant_start|> Answer <|assistant_end|>

[-----ignore-----] [----------train on this----------]
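Loss masking boils down to building the target sequence and overwriting every position you don't want to train on with an "ignore" label. A minimal sketch with made-up token ids, assuming the loss skips targets equal to -1 (as `ignore_index` does in common cross-entropy implementations). In this sketch `<|assistant_start|>` itself is not a target, since the prompt supplies it at inference time.

```python
IGNORE = -1  # label value the loss skips (e.g. ignore_index in cross-entropy)

def build_sft_targets(segments):
    """Turn (token_ids, is_assistant) segments into inputs and masked targets.

    Targets are the inputs shifted left by one (next-token prediction); any
    target that belongs to a non-assistant segment is replaced with IGNORE.
    """
    ids, train_mask = [], []
    for tokens, is_assistant in segments:
        ids.extend(tokens)
        train_mask.extend([is_assistant] * len(tokens))
    inputs = ids[:-1]
    targets = [
        tok if keep else IGNORE
        for tok, keep in zip(ids[1:], train_mask[1:])
    ]
    return inputs, targets

if __name__ == "__main__":
    # Hypothetical ids: 1=<|user_start|>, 10-11=question, 2=<|user_end|>,
    # 3=<|assistant_start|>, 20-21=answer, 4=<|assistant_end|>
    inputs, targets = build_sft_targets([
        ([1, 10, 11, 2], False),
        ([3], False),
        ([20, 21, 4], True),
    ])
    print(inputs)   # [1, 10, 11, 2, 3, 20, 21]
    print(targets)  # [-1, -1, -1, -1, 20, 21, 4]
```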

Training data (~21K examples):

- ARC-Easy: 2,351

- ARC-Challenge: 1,119

- GSM8K: 7,473

- SmolTalk: 10,000

Performance gains:

| Metric | After midtraining | After SFT | Change (points) |
|---|---|---|---|
| ARC-Easy | 35.61% | 38.76% | +3.15 |
| GSM8K | 2.50% | 4.55% | +2.05 |
| HumanEval | 6.71% | 8.54% | +1.83 |

Stage 5: Reinforcement Learning (Optional)

Enable RL to boost math performance:

# Uncomment in speedrun.sh

torchrun --standalone --nproc_per_node=8 -m scripts.chat_rl -- --run=$WANDB_RUN

Uses policy gradient methods (REINFORCE) to improve:

- GSM8K: 4.55% → 7.58% (+3.03%)

- Time: ~30-45 minutes
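The core of REINFORCE with a baseline fits in a few lines: sample several answers per problem, reward the correct ones, and scale each answer's log-probability by how much better it did than the group average. This is a generic sketch with plain floats standing in for autograd tensors; nanochat's `chat_rl` script may differ in details such as normalization.

```python
def reinforce_loss(rewards, logprobs):
    """Policy-gradient loss for a group of sampled answers to one prompt.

    rewards[i]: scalar reward for sample i (e.g. 1.0 if the GSM8K answer is correct).
    logprobs[i]: total log-probability the model assigned to sample i's tokens.
    The group-mean reward acts as a baseline, so better-than-average samples
    are pushed up and worse-than-average ones are pushed down.
    """
    baseline = sum(rewards) / len(rewards)
    advantages = [r - baseline for r in rewards]
    # Minimizing this raises the log-probs of positive-advantage samples.
    loss = -sum(a * lp for a, lp in zip(advantages, logprobs)) / len(rewards)
    return advantages, loss

if __name__ == "__main__":
    adv, loss = reinforce_loss([1.0, 0.0, 0.0, 1.0], [-5.0, -7.0, -6.0, -4.0])
    print(adv, loss)  # [0.5, -0.5, -0.5, 0.5] -0.5
```

If every sample in a group gets the same reward, all advantages are zero and that group contributes no gradient, which is one reason to sample several answers per problem.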

Interacting with Your Model

Command-Line Interface

source .venv/bin/activate

python -m scripts.chat_cli

Example conversation (illustrative; your model's replies will vary and are often less polished):

You: Why is the sky blue?

Assistant: The sky appears blue because of a phenomenon called Rayleigh scattering. When sunlight enters Earth's atmosphere, it collides with air molecules. Blue light has shorter wavelengths and gets scattered more than other colors, making the sky appear blue to our eyes.

You: What's 15 times 23?

Assistant: Let me calculate that. <|python_start|>15 * 23<|python_end|><|output_start|>345<|output_end|> The answer is 345.

Web Interface (ChatGPT-style)

For a better experience, launch the web UI:

python -m scripts.chat_web

This starts a server on port 8000. If you're on a cloud instance:

- Find your instance's public IP

- Visit http://<your-public-ip>:8000 (make sure port 8000 is open in the instance's firewall rules)

Features:

- ChatGPT-like interface (built with FastAPI + HTML/JS)

- Streaming responses (tokens appear as they're generated)

- Conversation history

- Adjustable temperature and top-k sampling

- Tool use visible (calculator results)
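Temperature and top-k are standard sampling controls. A minimal sketch of what they do to the model's output distribution; this is generic code, not the web server's implementation, and the function and parameter names are illustrative.

```python
import math
import random

def sample_top_k(logits, temperature=1.0, top_k=None, rng=random):
    """Sample a token index from raw logits with temperature and top-k filtering."""
    if temperature <= 0:
        return max(range(len(logits)), key=lambda i: logits[i])  # greedy decoding
    candidates = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    if top_k is not None:
        candidates = candidates[:top_k]  # keep only the k most likely tokens
    # Lower temperature sharpens the distribution; higher flattens it.
    scaled = [logits[i] / temperature for i in candidates]
    m = max(scaled)  # subtract the max before exp for numerical stability
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(candidates, weights=weights, k=1)[0]

if __name__ == "__main__":
    rng = random.Random(0)
    logits = [1.0, 5.0, 2.0, 0.5]
    print([sample_top_k(logits, temperature=0.8, top_k=2, rng=rng) for _ in range(10)])
```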

Understanding the Technical Innovations

The Muon Optimizer

One of the key techniques nanochat uses is the Muon optimizer (MomentUm Orthogonalized by Newton-Schulz), which originated in Keller Jordan's modded-nanogpt speedrun work. Unlike AdamW, Muon orthogonalizes the momentum-smoothed update for each 2D weight matrix, providing:

- Better gradient flow through deep networks

- Faster convergence for matrix parameters

- Stability in low precision (BF16)

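Orthogonalizing an update G = USVᵀ means replacing it with approximately UVᵀ: the directions are kept, but every singular value is pushed to roughly 1. Muon approximates this with a few Newton-Schulz iterations, which are plain matrix multiplications and run stably in bfloat16 on GPU.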
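To see the idea in isolation, here is the classic cubic Newton-Schulz iteration X ← 1.5X − 0.5XXᵀX in pure Python. It is a sketch for intuition: Muon itself uses a tuned quintic polynomial (coefficients 3.4445, -4.7750, 2.0315) that converges in fewer steps but only approximately, and it runs on GPU tensors rather than lists.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]

def transpose(a):
    return [list(row) for row in zip(*a)]

def newton_schulz_orthogonalize(g, steps=20):
    """Approximate the orthogonal factor U V^T of a full-rank g = U S V^T.

    Scaling by the Frobenius norm puts every singular value in (0, 1], inside
    the cubic iteration's convergence range (0, sqrt(3)), and each step
    X <- 1.5 X - 0.5 X X^T X pushes all singular values toward 1.
    """
    norm = sum(v * v for row in g for v in row) ** 0.5
    if norm == 0:
        return g  # nothing to orthogonalize
    x = [[v / norm for v in row] for row in g]
    for _ in range(steps):
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [
            [1.5 * x[i][j] - 0.5 * xxtx[i][j] for j in range(len(x[0]))]
            for i in range(len(x))
        ]
    return x

if __name__ == "__main__":
    q = newton_schulz_orthogonalize([[1.0, 2.0], [3.0, 4.0]])
    print(matmul(q, transpose(q)))  # approximately the 2x2 identity matrix
```

A symmetric positive-definite input is a handy sanity check: its orthogonal factor is the identity, so the iteration should return (nearly) I.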

KV Cache for Efficient Inference

The inference engine implements sophisticated KV caching with:

- Lazy initialization: Cache created on first use

- Dynamic growth: Starts small, grows in 1024-token chunks

- Batch prefill: Prefill once, clone cache for multiple samples

- Causal masking during prefill: when several tokens are processed at once, each one attends only to cached tokens and to earlier positions in the same chunk

This allows generating multiple samples (for best-of-N sampling) without recomputing the prompt encoding.
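A toy model of the cache bookkeeping described above: lazy creation, growth in 1024-token chunks, and cloning a prefilled cache so several samples share one prompt encoding. Everything here (the class name, list storage, deep copy) is a hypothetical stand-in for nanochat's tensor-based engine.

```python
import copy

class ToyKVCache:
    """Hypothetical per-sequence KV cache that grows in fixed-size chunks.

    Real caches hold per-layer key/value tensors; plain lists stand in here,
    and `capacity` mimics preallocating GPU memory one chunk at a time.
    """

    CHUNK = 1024

    def __init__(self):
        self.keys, self.values = None, None  # lazy: nothing allocated yet
        self.capacity = 0

    def append(self, k, v):
        if self.keys is None:
            self.keys, self.values = [], []
        if len(self.keys) == self.capacity:
            self.capacity += self.CHUNK  # grow by one chunk when full
        self.keys.append(k)
        self.values.append(v)

    def __len__(self):
        return 0 if self.keys is None else len(self.keys)

    def clone(self):
        return copy.deepcopy(self)

if __name__ == "__main__":
    # Prefill the prompt once, then fork the cache for N independent samples.
    prompt_cache = ToyKVCache()
    for pos in range(5):
        prompt_cache.append(f"k{pos}", f"v{pos}")
    samples = [prompt_cache.clone() for _ in range(3)]
    samples[0].append("k5", "v5")  # sample 0 decodes one token; the others are unaffected
    print(len(prompt_cache), [len(s) for s in samples])  # 5 [6, 5, 5]
```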

Tool Use Integration

The engine implements a calculator tool via safe Python expression evaluation:

- Model generates <|python_start|>

- Engine collects tokens until <|python_end|>

- Decode tokens to string: "123 + 456"

- Evaluate safely: 579

- Force-inject result: <|output_start|>579<|output_end|>

- Continue generation

The model learns to invoke the tool, and the engine provides correct results.
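One way to implement the "evaluate safely" step is to parse the expression and walk its syntax tree, allowing only numbers and arithmetic operators. This is a hypothetical stand-in using an AST whitelist, not necessarily how nanochat's engine evaluates expressions.

```python
import ast
import operator

# Whitelisted arithmetic operators; exponentiation is left out on purpose,
# since something like 9**9**9 could tie up the CPU.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.FloorDiv: operator.floordiv,
    ast.Mod: operator.mod,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}

def safe_calculate(expr):
    """Evaluate a pure arithmetic expression; raise ValueError on anything else."""
    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"disallowed expression: {ast.dump(node)}")
    return _eval(ast.parse(expr, mode="eval"))

def run_tool_call(expr):
    """Format the text the engine would inject after a python_start/python_end call."""
    try:
        result = safe_calculate(expr)
    except (ValueError, SyntaxError, ZeroDivisionError):
        result = "error"
    return f"<|output_start|>{result}<|output_end|>"

if __name__ == "__main__":
    print(run_tool_call("123 + 456"))         # <|output_start|>579<|output_end|>
    print(run_tool_call("__import__('os')"))  # <|output_start|>error<|output_end|>
```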

Troubleshooting Common Issues

CUDA Out of Memory

Solutions:

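- Reduce the per-device batch size: --device_batch_size=16 (or 8, 4, 2, 1). The total batch size stays the same because the script compensates with gradient accumulation, so training gets slower rather than different.

- Train a smaller model: --depth=12 instead of 20

- Reduce the sequence length in GPTConfig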

Data Download Fails

Solutions:

- Retry the download: python -m nanochat.dataset -n 240

- Check existing shards: ls -lh ~/.cache/nanochat/data/

- Download a subset first: python -m nanochat.dataset -n 50

Loss Not Decreasing

Debug steps:

- Verify data loading with a small test run

- Check learning rate is not too high/low

- Try a smaller model for faster debugging: --depth=6

Slow Training Speed

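Expected: well under a second per optimizer step on 8xH100 for d20. Assuming a total batch of 524,288 tokens, the 11.2B-token run is about 21,400 steps, and fitting those into the ~2-hour budget works out to roughly a third of a second per step.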

If slower:

- Check GPU utilization: nvidia-smi -l 1 (should be ~95%+)

- Monitor CPU usage for data loading bottlenecks

- Verify all 8 GPUs are active

Customization & Experiments

Scaling to Larger Models

Train a d26 model (GPT-2 level, ~$300):

# Download more data

python -m nanochat.dataset -n 450 &

# Increase depth, reduce batch size

torchrun --standalone --nproc_per_node=8 -m scripts.base_train -- --depth=26 --device_batch_size=16

Expected results:

- CORE: ~29% (matches GPT-2!)

- Time: ~12 hours

- Cost: ~$300

Using Your Own Data

For pretraining:

- Format as plain text files (one document per line)

- Place them in ~/.cache/nanochat/data/custom/

- Modify the dataset loader to read from your directory

For fine-tuning: Create a new task class and add it to the SFT mixture in the training script.
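A sketch of the shape such a task class might take, assuming each example is a list of role/content messages like the chat format used throughout this tutorial. The class name and interface are hypothetical; mirror whatever base class the existing tasks in your checkout use.

```python
class DomainQATask:
    """Hypothetical SFT task: each example is a short user/assistant conversation.

    The interface (len() plus get_example(i) returning {"messages": [...]}) is an
    assumption for illustration; match it to the task classes in your nanochat checkout.
    """

    def __init__(self, pairs):
        self.pairs = list(pairs)  # (question, answer) tuples from your domain data

    def __len__(self):
        return len(self.pairs)

    def get_example(self, index):
        question, answer = self.pairs[index]
        return {
            "messages": [
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }

if __name__ == "__main__":
    task = DomainQATask([("What is the capital of France?", "Paris.")])
    print(len(task), task.get_example(0))
```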

Single GPU Training

Remove torchrun, everything auto-adapts:

python -m scripts.base_train -- --depth=20

Same final result, 8x longer time via automatic gradient accumulation.
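The "8x longer" figure falls out of holding the total batch fixed: when fewer GPUs (or a smaller --device_batch_size) process fewer tokens per micro-step, the script accumulates gradients over more micro-steps before each update. A sketch of that arithmetic; the defaults below are assumptions, so check them against scripts/base_train.py.

```python
def grad_accum_steps(total_batch_tokens, device_batch_size, seq_len, world_size):
    """Micro-batches each GPU must accumulate to reach the target total batch."""
    tokens_per_micro_step = device_batch_size * seq_len * world_size
    if total_batch_tokens % tokens_per_micro_step:
        raise ValueError("total batch must be a multiple of the per-step tokens")
    return total_batch_tokens // tokens_per_micro_step

if __name__ == "__main__":
    # Assumed d20 defaults: 524,288-token total batch, 32 sequences per GPU,
    # 2,048 tokens per sequence.
    TOTAL, DEV_BS, SEQ = 524_288, 32, 2_048
    print(grad_accum_steps(TOTAL, DEV_BS, SEQ, world_size=8))  # 1
    print(grad_accum_steps(TOTAL, DEV_BS, SEQ, world_size=1))  # 8
    print(grad_accum_steps(TOTAL, 16, SEQ, world_size=8))      # 2
```

The same mechanism is why lowering --device_batch_size to fix out-of-memory errors changes speed rather than the optimization itself.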

Conclusion

Congratulations! In 4 hours and $100, you've accomplished something remarkable:

✅ Trained a custom tokenizer from 2B characters of web text

✅ Pretrained a 561M parameter transformer from scratch

✅ Fine-tuned through mid → SFT → RL pipeline

✅ Deployed a ChatGPT-like web interface

✅ Achieved measurable performance on standard benchmarks

✅ Understood modern LLM techniques at implementation level

What This Proves

LLM development is accessible. You don't need millions of dollars—just $100 and an afternoon. The nanochat project demonstrates that transparency and education matter more than scale. With ~8,300 lines of readable code, you can understand the full pipeline from tokenization through deployment.

Your Model's Capabilities

Your model can:

- Hold basic conversations

- Answer factual questions (~30% accuracy on MMLU)

- Solve simple math problems (with calculator tool)

- Generate basic code (8.5% on HumanEval)

- Write creative content

Is it GPT-4? No. But it's yours, and you understand exactly how it works.

What's Next?

Immediate steps:

- Scale to d26 for GPT-2 level performance

- Fine-tune on your domain-specific data

- Experiment with different architectures

- Share your results with the community

Longer-term directions:

- Multi-modal models (add vision)

- Longer context (8K+ tokens)

- Better RL (PPO, GRPO)

- Efficient deployment (quantization, distillation)

The Bigger Picture

The future of AI research shouldn't require million-dollar budgets. It should reward good ideas, executed well. nanochat is a step toward that future—a complete, transparent, accessible implementation of the techniques powering ChatGPT.

Fork it. Modify it. Break it. Improve it. Share what you learn.

And most importantly: you now know how to build ChatGPT.

Quick Reference

Full pipeline:

bash speedrun.sh

Individual stages:

# Tokenizer

python -m scripts.tok_train --max_chars=2000000000

# Base training

torchrun --standalone --nproc_per_node=8 -m scripts.base_train -- --depth=20

# Midtraining

torchrun --standalone --nproc_per_node=8 -m scripts.mid_train

# SFT

torchrun --standalone --nproc_per_node=8 -m scripts.chat_sft

# Chat

python -m scripts.chat_cli

python -m scripts.chat_web

Monitoring:

# Watch training log

tail -f speedrun.log

# GPU utilization

watch -n 1 nvidia-smi

# Disk usage

du -sh ~/.cache/nanochat/

Happy training! 🚀

Questions? Open an issue on GitHub or start a discussion.

Want to share results? Tag @karpathy on Twitter.

Remember: The best way to understand LLMs is to build one yourself. You just did.
